Personal page of Professor Damiano Brigo at Imperial College London, Dept. of Mathematics

Professor (Chair), Stochastic Analysis Group

& co-Head of Mathematical Finance,

Imperial College London

For Citation Data, H-index, Social networks support, etc, please click here

- Site Introduction, and Damiano Brigo's Profile and

short CV

short CV

Research Papers

Research Papers

Seminars and Public Lectures

Seminars and Public Lectures

- PhD and MSc candidates, please click

here

MSc lectures and London Graduate School PhD lectures

MSc lectures and London Graduate School PhD lectures

Randomness, Dynamics and Risk (video)

Randomness, Dynamics and Risk (video)

- Publications, Press, Impact and Networking

(Financial

Modeling, Systems Theory, Probability, Statistics)

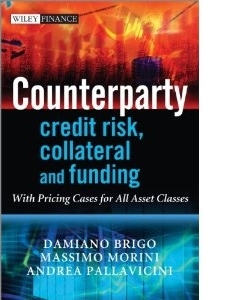

- Books: Interest-Rate Models ,

Credit Models Crisis,

Credit Risk Frontiers ,

Counterparty Credit Risk

,

Counterparty Credit Risk

- Contact Details

___ Welcome! ___

INTRODUCTION

Back to top

Welcome!

Welcome to my web site.

As you can easily see, this page does not conform to the flashing, singing, animated, pyrotechnic web-pages that you can find nowadays. No need to "skip intro" here but, just in case, you may "skip intro" all the same.

Notwithstanding this page's somberness and lack of special effects, here you can find:

- A short profile, typically used for invited-speaker description at events or author description in books and conferences.

Thus I hope that if you are interested either in mathematical finance or in stochastic differential equations in connection with exponential families and the nonlinear-filtering problem, you will find this page to be at least a little helpful. The themes treated here concern indeed financial modelling, probability, systems theory and stochastic geometry from A to Zzzzzzzzzzzzzzz (where have you heard this one before), so bear with me....

This page is also an opportunity to convey an image of mathematicians and financial engineers different from the stereotype some people have in mind: not all mathematicians and physicists working in actual or academic finance take themselves too seriously, rather than seriously enough.

Anyway, enjoy your stay at this little "professional corner" of mine in cyberspace.

You can contact me by following this link, and please be patient if I reply after a while or do not reply: the number of messages I receive is out of control, and comes on top of the huge amount of emails I receive in my professional accounts.

Arrivederci!

Back to top

NEW and RECENT RESEARCH PAPERS

For a complete list of research papers click here

Coordinate-free stochastic differential equations as jets

Optimal approximation of SDEs on submanifolds: the Ito-vector and Ito-jet projections

SDEs with uniform distributions: Peacocks, Conic martingales and mean reverting uniform diffusions

Projection based dimensionality reduction for measure valued evolution equations in statistical manifolds

Optimal approximations of the Fokker-Planck-Kolmogorov equation: projection, maximum likelihood eigenfunctions and Galerkin methods

An indifference approach to the cost of capital constraints: KVA and beyond

Static vs adapted optimal execution strategies in two benchmark trading models

Disentangling wrong-way risk: pricing CVA via change of measures and drift adjustment

Impact of Multiple Curve Dynamics in Credit Valuation Adjustments under Collateralization

Invariance, existence and uniqueness of solutions of nonlinear valuation PDEs and FBSDEs inclusive of credit risk, collateral and funding costs

Funding, Repo and Credit Inclusion in Option Pricing via Dividends

Nonlinear Valuation under Collateral, Credit Risk and Funding Costs: A Numerical Case Study Extending Black-Scholes

Multi Currency Credit Default Swaps: Quanto Effects and FX Devaluation Jumps

The Multivariate Mixture Dynamics Model: Shifted dynamics and correlation skew

Impact of Robotics, RPA and AI on the insurance industry: challenges and opportunities

CCPs, Central Clearing, CSA, Credit Collateral and Funding Costs Valuation FAQ: Re-hypothecation, CVA, Closeout, Netting, WWR, Gap-Risk, Initial and Variation Margins, Multiple Discount Curves, FVA?

CCP Cleared or Bilateral CSA Trades with Initial/Variation Margins under credit, funding and wrong-way risks: A Unified Valuation Approach

Consistent iterated simulation of multi-variate default times: a Markovian indicators characterization

Stochastic filtering via L2 projection on mixture manifolds with computer algorithms and numerical examples

Interest-Rate Modelling in Collateralized Markets: Multiple curves, credit-liquidity effects, CCPs

Funding, Collateral and Hedging: Uncovering the Mechanics and the Subtleties of Funding Valuation Adjustments

CoCo Bonds Valuation with Equity- and Credit-Calibrated First Passage Structural Models

Optimal Execution Comparison Across Risks and Dynamics, with Solutions for Displaced Diffusions

The arbitrage-free Multivariate Mixture Dynamics Model: Consistent single-assets and index volatility smiles

Back to top

ALL DOWNLOADABLE SCIENTIFIC / ACADEMIC RESEARCH PAPERS

Research papers in stochastic nonlinear filtering, probability, statistics,

information geometry, and in several areas of quantitative finance.

Most papers are either directly downloadable or downloadable via SSRN/arXiv

Back to top

Probability, Statistics, Nonlinear Filtering, Stochastic Differential and Information Geometry

Back to scientific/academic works

Back to top

Counterparty Credit Risk, Collateral, Funding, CVA/DVA/FVA, multiple curves.

- Counterparty Risk FAQ: Credit VaR, PFE, CVA, DVA, Closeout, Netting, Collateral, Re-hypothecation, WWR, Basel, Funding, CCDS and Margin Lending

- Disentangling wrong-way risk: pricing CVA via change of measures and drift adjustment

- Impact of Multiple Curve Dynamics in Credit Valuation Adjustments under Collateralization

- Invariance, existence and uniqueness of solutions of nonlinear valuation PDEs and FBSDEs inclusive of credit risk, collateral and funding costs

- Funding, Repo and Credit Inclusion in Option Pricing via Dividends

- Nonlinear Valuation under Collateral, Credit Risk and Funding Costs: A Numerical Case Study Extending Black-Scholes

- CCPs, Central Clearing, CSA, Credit Collateral and Funding Costs Valuation FAQ: Re-hypothecation, CVA, Closeout, Netting, WWR, Gap-Risk, Initial and Variation Margins, Multiple Discount Curves, FVA?

- CCP Cleared or Bilateral CSA Trades with Initial/Variation Margins under credit, funding and wrong-way risks: A Unified Valuation Approach

- Interest-Rate Modelling in Collateralized Markets: Multiple curves, credit-liquidity effects, CCPs

- Funding, Collateral and Hedging: Uncovering the Mechanics and the Subtleties of Funding Valuation Adjustments

- Funding Valuation Adjustment: A Consistent Framework Including CVA, DVA, Collateral, Netting Rules and Re-Hypothecation

- An indifference approach to the cost of capital constraints: KVA and beyond

- Illustrating a problem in the self-financing condition in two 2010-2011 papers on funding, collateral and discounting

- Restructuring Counterparty Credit Risk

- Collateral Margining in Arbitrage-Free Counterparty Valuation Adjustment including Re-Hypotecation and Netting

- Dangers of Bilateral Counterparty Risk: the fundamental impact of closeout conventions

- Bilateral counterparty risk valuation for interest-rate products: impact of volatilities and correlations

- Bilateral Counterparty Risk Valuation with Stochastic Dynamical Models and Application to Credit Default Swaps

- Counterparty Risk Valuation for Energy-commodities Swaps: Impact of volatilities and correlation

- Counterparty Risk for Credit Default Swaps: Impact of spread volatility and default correlation

- A Formula for Interest Rate Swaps Valuation under Counterparty Risk in presence of Netting Agreements

- Risk Neutral Pricing of Counterparty Risk

- Counterparty risk and Contingent CDS valuation under correlation between interest-rates and default

- Credit Default Swap Calibration and Equity Swap Valuation under Counterparty Risk with a Tractable Structural Model

- Credit Default Swap Calibration and Counterparty Risk Valuation with a Scenario based First Passage Model

- Credit Calibration with Structural Models: The Lehman case and Equity Swaps under Counterparty Risk

Back to scientific/academic works

Back to top

Credit Derivatives Papers: CDS, CDS Options, CDS Liquidity, CDOs, etc.

Back to scientific/academic works

Back to top

Algorithmic trading and optimal execution

Back to scientific/academic works

Back to top

Volatility smile modeling

Back to scientific/academic works

Back to top

Interest-rate derivatives modeling, interest rate models with credit and liquidity effects, multiple curves

Back to scientific/academic works

Back to top

Basket options

Back to scientific/academic works

Back to top

General option-pricing theory

Back to scientific/academic works

Back to top

Risk Management

Back to scientific/academic works

Back to top

Probability, Statistics, Nonlinear Filtering, Stochastic Differential and Information Geometry.

- The direct L2 geometric structure on a manifold of probability densities with applications to Filtering (by Damiano Brigo)

(Click here to download a PDF file with the paper).

In this paper we introduce a projection method for the space of probability distributions based on the differential geometric approach to statistics. This method is based on a direct $L^2$ metric as opposed to the usual Hellinger distance and the related Fisher Information metric. We explain how this apparatus can be used for the nonlinear filtering problem, in relationship also to earlier projection methods based on the Fisher metric. Past projection filters focused on the Fisher metric and the exponential families that made the filter correction step exact. In this work we introduce the mixture projection filter, namely the projection filter based on the direct $L^2$ metric and based on a manifold given by a mixture of pre-assigned densities.

Back to scientific/academic works

Back to top

- Stochastic filtering via L2 projection on mixture manifolds with computer algorithms and numerical examples (by John Armstrong and Damiano Brigo)

Click here to download a PDF file with the paper from arXiv.

We examine some differential geometric approaches to finding approximate solutions to the continuous time nonlinear filtering problem. Our primary focus is a projection method using the direct L2 metric onto a family of normal mixtures. We compare this method to earlier projection methods based on the Hellinger distance/Fisher metric and exponential families, and we compare the L2 mixture projection filter with a particle method with the same number of parameters. We study particular systems that may illustrate the advantages of this filter over other algorithms when comparing outputs with the optimal filter. We finally consider a specific software design that is suited for a numerically efficient implementation of this filter and provide numerical examples.
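The static building block behind a direct L2 projection onto a mixture family can be sketched in a few lines. This is only an illustration of the metric projection of a fixed density, not the filter described in the paper: the two Gaussian components, the grid, and all function names are hypothetical choices, and the weights are left unconstrained (a real mixture family would also require them to be non-negative and to sum to one).

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def l2_inner(f_vals, g_vals, dx):
    """Riemann-sum approximation of the L2 inner product on a uniform grid."""
    return dx * sum(f * g for f, g in zip(f_vals, g_vals))

def project_onto_two_component_mixture(p_vals, q1_vals, q2_vals, dx):
    """Return (theta1, theta2) minimising the L2 norm of p - theta1*q1 - theta2*q2.

    Solves the 2x2 normal equations G theta = c, where G is the Gram matrix
    of (q1, q2) and c holds the inner products of p with q1 and q2.
    """
    g11 = l2_inner(q1_vals, q1_vals, dx)
    g12 = l2_inner(q1_vals, q2_vals, dx)
    g22 = l2_inner(q2_vals, q2_vals, dx)
    c1 = l2_inner(p_vals, q1_vals, dx)
    c2 = l2_inner(p_vals, q2_vals, dx)
    det = g11 * g22 - g12 * g12
    theta1 = (g22 * c1 - g12 * c2) / det
    theta2 = (g11 * c2 - g12 * c1) / det
    return theta1, theta2

# Hypothetical example: the target density already lies in the family,
# so the projection should return its mixture weights.
dx = 0.01
grid = [-10.0 + i * dx for i in range(2001)]
q1 = [gaussian_pdf(x, -1.0, 1.0) for x in grid]
q2 = [gaussian_pdf(x, 2.0, 0.5) for x in grid]
p = [0.3 * a + 0.7 * b for a, b in zip(q1, q2)]
theta = project_onto_two_component_mixture(p, q1, q2, dx)
```

Because the L2 structure is linear in the mixture weights, the projection reduces to a linear solve, which is one reason mixture families pair naturally with the direct L2 metric.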

Back to scientific/academic works

Back to top

- A finite dimensional filter with exponential conditional density (by Damiano Brigo)

Part of this paper has been published in "Statistics and Probability Letters" 49 (2000), pp. 127-134. (Click here to download a PDF file with the paper).

In this paper we consider the continuous-time nonlinear filtering problem, which has an infinite-dimensional solution in general, as proved by Chaleyat-Maurel and Michel. There are few examples of nonlinear systems for which the optimal filter is finite dimensional, in particular Kalman's, Benes', and Daum's filters. In the present paper, we construct new classes of scalar nonlinear filtering problems admitting finite-dimensional filters. We consider a given (nonlinear) diffusion coefficient for the state equation, a given (nonlinear) observation function, and a given finite-dimensional exponential family of probability densities. We construct a drift for the state equation such that the resulting nonlinear filtering problem admits a finite-dimensional filter evolving in the prescribed exponential family augmented by the observation function and its square.

Back to scientific/academic works

Back to top

- Coordinate-free stochastic differential equations as jets (by John Armstrong and Damiano Brigo)

(Click here to download a PDF file with the paper).

We explain how Ito Stochastic Differential Equations on manifolds may be defined as 2-jets of curves and show how this relationship can be interpreted in terms of a convergent numerical scheme. We use jets as a natural language to express geometric properties of SDEs. We show how jets can lead to intuitive representations of Ito SDEs, including three different types of drawings. We explain that the mainstream choice of Fisk-Stratonovich-McShane calculus for stochastic differential geometry is not necessary. We give a new geometric interpretation of the Ito-Stratonovich transformation in terms of the 2-jets of curves induced by consecutive vector flows. We discuss the forward Kolmogorov equation and the backward diffusion operator in geometric terms. In the one-dimensional case we consider percentiles of the solutions of the SDE and their properties. This allows us to interpret the coefficients of SDEs in terms of "fan diagrams". In particular the median of an SDE solution is associated to the drift of the SDE in Stratonovich form for small times.
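The last statement of the abstract can be checked numerically on a simple case. The sketch below assumes geometric Brownian motion, an example chosen here and not taken from the paper: for the Ito SDE dX = mu X dt + sigma X dW the Stratonovich drift is (mu - sigma^2/2) X, and the median of X_t is exactly x0 exp((mu - sigma^2/2) t). The parameter values and function name are hypothetical.

```python
import math
import random
import statistics

def gbm_terminal_samples(x0, mu, sigma, t, n_paths, seed=0):
    """Exact samples of X_t for the Ito SDE dX = mu*X dt + sigma*X dW."""
    rng = random.Random(seed)
    log_drift = (mu - 0.5 * sigma ** 2) * t
    log_vol = sigma * math.sqrt(t)
    return [x0 * math.exp(log_drift + log_vol * rng.gauss(0.0, 1.0))
            for _ in range(n_paths)]

# Hypothetical parameters, chosen so that the two drifts have opposite signs.
x0, mu, sigma, t = 1.0, 0.10, 0.60, 0.05
samples = gbm_terminal_samples(x0, mu, sigma, t, n_paths=100_000)
sample_median = statistics.median(samples)

# First-order (small time) predictions for the median from each drift.
ito_guess = x0 + mu * x0 * t
stratonovich_guess = x0 + (mu - 0.5 * sigma ** 2) * x0 * t

print("sample median      :", sample_median)
print("Ito drift guess    :", ito_guess)
print("Stratonovich guess :", stratonovich_guess)
```

In this example the Ito drift points upward while the Stratonovich drift points downward, and the sample median follows the Stratonovich one.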

Back to scientific/academic works

Back to top

- Optimal approximation of SDEs on submanifolds: the Ito-vector and Ito-jet projections (by John Armstrong and Damiano Brigo)

(Click here to download a PDF file with the paper).

We define two new notions of projection of a stochastic differential equation (SDE) onto a submanifold: the Ito-vector and Ito-jet projections. This allows one to systematically develop low dimensional approximations to high dimensional SDEs using differential geometric techniques. The approach generalizes the notion of projecting a vector field onto a submanifold in order to derive approximations to ordinary differential equations, and improves the previous Stratonovich projection method by adding optimality analysis and results. Indeed, just as in the case of ordinary projection, our definitions of projection are based on optimality arguments and give in a well-defined sense "optimal" approximations to the original SDE. As an application we consider approximating the solution of the non-linear filtering problem with a Gaussian distribution and show how the newly introduced Ito projections lead to optimal approximations in the Gaussian family and briefly discuss the optimal approximation for more general families of distribution. We perform a numerical comparison of our optimally approximated filter with the classical Extended Kalman Filter to demonstrate the efficacy of the approach.

Back to scientific/academic works

Back to top

- SDEs with uniform distributions: Peacocks, Conic martingales and mean reverting uniform diffusions (By Damiano Brigo, Monique Jeanblanc and Frederic Vrins)

(Click here to download a PDF file with the paper).

We introduce a way to design Stochastic Differential Equations of diffusion type admitting a unique strong solution distributed as a uniform law with conic time-boundaries. We connect this general result to some special cases that were previously found in the peacock processes literature, and with the square root of time boundary case in particular. We introduce a special case with linear time boundary. We further introduce general mean-reverting diffusion processes having a constant uniform law at all times. This may be used to model random probabilities, random recovery rates or random correlations. We study local time and activity of such processes and verify via an Euler scheme simulation that they have the desired uniform behaviour.
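An Euler check of this kind can be sketched as follows. The SDE used here, dX = -k X dt + sqrt(k (1 - X^2)) dW on [-1, 1], is an assumption of this sketch: it is obtained by asking that the uniform density solve the stationary Fokker-Planck equation with a linear mean-reverting drift, and it may differ from the parametrization in the paper. The clipping at the boundaries, the step size and the function name are likewise illustrative choices.

```python
import math
import random

def simulate_uniform_diffusion(n_paths, n_steps, dt, k=1.0, seed=0):
    """Euler scheme for dX = -k*X dt + sqrt(k*(1 - X^2)) dW, clipped to [-1, 1].

    Paths start from a uniform draw on [-1, 1], so the law should remain
    (approximately) uniform at every step if the SDE preserves it.
    """
    rng = random.Random(seed)
    sqrt_dt = math.sqrt(dt)
    finals = []
    for _ in range(n_paths):
        x = rng.uniform(-1.0, 1.0)
        for _ in range(n_steps):
            diffusion = math.sqrt(max(k * (1.0 - x * x), 0.0))
            x += -k * x * dt + diffusion * sqrt_dt * rng.gauss(0.0, 1.0)
            x = min(1.0, max(-1.0, x))  # keep the Euler iterate inside [-1, 1]
        finals.append(x)
    return finals

finals = simulate_uniform_diffusion(n_paths=4000, n_steps=200, dt=0.005)
mean = sum(finals) / len(finals)
variance = sum((x - mean) ** 2 for x in finals) / len(finals)
inner_fraction = sum(1 for x in finals if abs(x) <= 0.5) / len(finals)

# A uniform law on [-1, 1] has mean 0, variance 1/3 and mass 1/2 on [-0.5, 0.5].
print(mean, variance, inner_fraction)
```

Comparing the sample mean, variance and the mass of a central interval with their uniform values gives a quick sanity check; a histogram or a Kolmogorov-Smirnov test would be the natural next step.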

Back to scientific/academic works

Back to top

- Projecting the Fokker-Planck equation onto a finite dimensional exponential family (By Damiano Brigo and Giovanni Pistone)

Working paper at a preliminary stage. (Click here to download a PDF file with the paper).

In the present paper we discuss problems concerning evolutions of densities related to Ito diffusions in the framework of the statistical exponential manifold. We develop a rigorous approach to the problem, and we particularize it to the orthogonal projection of the evolution of the density of a diffusion process onto a finite dimensional exponential manifold. It has been shown by D. Brigo (1996) that the projected evolution can always be interpreted as the evolution of the density of a different diffusion process. We give also a compactness result when the dimension of the exponential family increases, as a first step towards a convergence result to be investigated in the future. The infinite dimensional exponential manifold structure introduced by G. Pistone and C. Sempi is used and some examples are given.

Back to scientific/academic works

Back to top

- Projection based dimensionality reduction for measure valued evolution equations in statistical manifolds (By Damiano Brigo and Giovanni Pistone)

(Click here to download a PDF file with the paper).

We propose a dimensionality reduction method for infinite-dimensional measure-valued evolution equations such as the Fokker-Planck partial differential equation or the Kushner-Stratonovich resp. Duncan-Mortensen-Zakai stochastic partial differential equations of nonlinear filtering, with potential applications to signal processing, quantitative finance, heat flows and quantum theory among many other areas. Our method is based on the projection coming from a duality argument built in the exponential statistical manifold structure developed by G. Pistone and co-authors. The choice of the finite dimensional manifold on which one should project the infinite dimensional equation is crucial, and we propose finite dimensional exponential and mixture families. This same problem had been studied, especially in the context of nonlinear filtering, by D. Brigo and co-authors but the L2 structure on the space of square roots of densities or of densities themselves was used, without taking an infinite dimensional manifold environment space for the equation to be projected. Here we re-examine such works from the exponential statistical manifold point of view, which allows for a deeper geometric understanding of the manifold structures at play. We also show that the projection in the exponential manifold structure is consistent with the Fisher Rao metric and, in case of finite dimensional exponential families, with the assumed density approximation. Further, we show that if the sufficient statistics of the finite dimensional exponential family are chosen among the eigenfunctions of the backward diffusion operator then the statistical-manifold or Fisher-Rao projection provides the maximum likelihood estimator for the Fokker Planck equation solution. We finally try to clarify how the finite dimensional and infinite dimensional terminology for exponential and mixture spaces are related.

Back to scientific/academic works

Back to top

- Optimal approximations of the Fokker-Planck-Kolmogorov equation: projection, maximum likelihood eigenfunctions and Galerkin methods (By Damiano Brigo and Giovanni Pistone)

(Click here to download a PDF file with the paper).

We study optimal finite dimensional approximations of the generally infinite-dimensional Fokker-Planck-Kolmogorov (FPK) equation, finding the curve in a given finite-dimensional family that best approximates the exact solution evolution. For a first local approximation we assign a manifold structure to the family and a metric. We then project the vector field of the partial differential equation (PDE) onto the tangent space of the chosen family, thus obtaining an ordinary differential equation for the family parameter. A second global approximation will be based on projecting directly the exact solution from its infinite dimensional space to the chosen family using the nonlinear metric projection. This will result in matching expectations with respect to the exact and approximating densities for particular functions associated with the chosen family, but this will require knowledge of the exact solution of FPK. A first way around this is a localized version of the metric projection based on the assumed density approximation. While the localization will remove global optimality, we will show that the somewhat arbitrary assumed density approximation is equivalent to the mathematically rigorous vector field projection. More interestingly we study the case where the approximating family is defined based on a number of eigenfunctions of the exact equation. In this case we show that the local vector field projection provides also the globally optimal approximation in metric projection, and for some families this coincides with a Galerkin method.
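The local (vector field) projection mentioned in the abstract can be written out in generic form. The formula below is a sketch for the direct $L^2$ metric, chosen here only for concreteness since the abstract leaves the metric open. The notation is an assumption of this sketch: $p(\cdot,\theta)$ denotes the finite-dimensional family with parameter $\theta \in \mathbb{R}^n$, $\mathcal{L}^*$ the forward operator so that the FPK equation reads $\partial_t p_t = \mathcal{L}^* p_t$, and $\langle f, h \rangle = \int f(x)\, h(x)\, dx$.

$$
g_{ij}(\theta) = \left\langle \frac{\partial p(\cdot,\theta)}{\partial \theta_i}, \frac{\partial p(\cdot,\theta)}{\partial \theta_j} \right\rangle,
\qquad
\dot{\theta}_i(t) = \sum_{j=1}^{n} g^{ij}(\theta(t)) \left\langle \mathcal{L}^* p(\cdot,\theta(t)), \frac{\partial p(\cdot,\theta(t))}{\partial \theta_j} \right\rangle ,
$$

where $g^{ij}$ are the entries of the inverse of the matrix $(g_{ij})$. The right-hand side is the orthogonal projection of the vector field $\mathcal{L}^* p(\cdot,\theta)$ onto the tangent space spanned by the $\partial p / \partial \theta_i$, which yields the ordinary differential equation for the family parameter.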

Back to scientific/academic works

Back to top

- Filtering by projection on the manifold of exponential densities (By Damiano Brigo)

PhD Thesis, Free University of Amsterdam, 1996. Several parts of this document have been published in journals such as "IEEE Transactions on Automatic Control", "Systems and Control Letters", "Bernoulli". (Click here to download a PDF file with the thesis, or click here to download a zipped PS file with the thesis from Francois Le Gland's page at IRISA).

This is the synthesis of three years of research work on the projection filter.

Back to scientific/academic works

Back to top

- New families of Copulas based on periodic functions (by Aurelien Alfonsi and Damiano Brigo)

Updated version published in "Communications in Statistics: Theory and Methods", Vol 34, issue 7, 2005. Cermics report 2003-250. Click here to download a PDF version of the related paper from the CERMICS web site.

[This paper is listed also in the Mathematical Finance papers area below]. Although there exists a large variety of copula functions, only a few are practically manageable, and often the choice in dependence modeling falls on the Gaussian copula. Further, most copulas are exchangeable, thus implying symmetric dependence. We introduce a way to construct copulas based on periodic functions. We study the two-dimensional case based on one dependence parameter and then provide a way to extend the construction to the n-dimensional framework. We can thus construct families of copulas in dimension n and parameterized by n - 1 parameters, implying possibly asymmetric relations. Such “periodic” copulas can be simulated easily.

Back to scientific/academic works

Back to top

- Consistent single- and multi-step sampling of multivariate arrival times: A characterization of self-chaining copulas (by Damiano Brigo and Kyriakos Chourdakis)

Click here to download a PDF version of the related paper from arXiv.org

[This paper is listed also in the Mathematical Finance papers area below] This paper deals with dependence across marginally exponentially distributed arrival times, such as default times in financial modeling or inter-failure times in reliability theory. We explore the relationship between dependence and the possibility to sample final multivariate survival in a long time-interval as a sequence of iterations of local multivariate survivals along a partition of the total time interval. We find that this is possible under a form of multivariate lack of memory that is linked to a property of the survival times copula. This property defines a "self-chaining-copula", and we show that this coincides with the extreme value copulas characterization. The self-chaining condition is satisfied by the Gumbel-Hougaard copula, a full characterization of self chaining copulas in the Archimedean family, and by the Marshall-Olkin copula. The result has important practical implications for consistent single-step and multi-step simulation of multivariate arrival times in a way that does not destroy dependency through iterations, as happens when inconsistently iterating a Gaussian copula.
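The consistency between one long step and many short steps can be illustrated numerically. The sketch below uses the extreme value (max-stability) identity C(u^t, v^t) = C(u, v)^t, which the abstract states coincides with the self-chaining condition. With exponential marginals, a survival probability u over the whole interval becomes u^(1/n) over each of n equal sub-intervals. The Clayton copula is used as a contrasting example in place of the Gaussian copula mentioned in the abstract, purely because it has a closed form; function names and parameter values are hypothetical.

```python
import math

def gumbel_copula(u, v, theta):
    """Gumbel-Hougaard copula C(u, v), for theta >= 1 and u, v in (0, 1]."""
    s = (-math.log(u)) ** theta + (-math.log(v)) ** theta
    return math.exp(-(s ** (1.0 / theta)))

def clayton_copula(u, v, theta):
    """Clayton copula C(u, v), for theta > 0 and u, v in (0, 1]."""
    return (u ** (-theta) + v ** (-theta) - 1.0) ** (-1.0 / theta)

def chaining_gap(copula, u, v, theta, n_steps):
    """Absolute gap between one long step and n chained short steps.

    The long step uses C(u, v); each short step uses C(u^(1/n), v^(1/n)),
    and n independent short steps multiply to that value raised to the n.
    """
    one_step = copula(u, v, theta)
    short_step = copula(u ** (1.0 / n_steps), v ** (1.0 / n_steps), theta)
    return abs(one_step - short_step ** n_steps)

gumbel_gap = chaining_gap(gumbel_copula, 0.6, 0.7, theta=2.0, n_steps=10)
clayton_gap = chaining_gap(clayton_copula, 0.6, 0.7, theta=2.0, n_steps=10)
print("Gumbel gap :", gumbel_gap)    # zero up to rounding error
print("Clayton gap:", clayton_gap)   # clearly non-zero
```

The Gumbel-Hougaard gap vanishes up to floating-point rounding, whereas the Clayton gap does not, showing how iterating a copula outside the extreme value class changes the joint survival probability.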

Back to scientific/academic works

Back to top

Counterparty Credit Risk, Collateral and Funding;

CVA / DVA / FVA. Credit Derivatives Modeling.

- Counterparty Risk FAQ: Credit VaR, PFE, CVA, DVA, Closeout, Netting, Collateral, Re-hypothecation, WWR, Basel, Funding, CCDS and Margin Lending (by Damiano Brigo)

Click here to download a PDF version of this paper from arXiv, or download it from SSRN here, or directly here

We present a dialogue on Counterparty Credit Risk touching on Credit Value at Risk (Credit VaR), Potential Future Exposure (PFE), Expected Exposure (EE), Expected Positive Exposure (EPE), Credit Valuation Adjustment (CVA), Debit Valuation Adjustment (DVA), DVA Hedging, Closeout conventions, Netting clauses, Collateral modeling, Gap Risk, Re-hypothecation, Wrong Way Risk, Basel III, inclusion of Funding costs, First to Default risk, Contingent Credit Default Swaps (CCDS) and CVA restructuring possibilities through margin lending. The dialogue is in the form of a Q&A between a CVA expert and a newly hired colleague.

Back to scientific/academic works

Back to top

- CCPs, Central Clearing, CSA, Credit Collateral and Funding Costs Valuation FAQ: Re-hypothecation, CVA, Closeout, Netting, WWR, Gap-Risk, Initial and Variation Margins, Multiple Discount Curves, FVA? (by Damiano Brigo and Andrea Pallavicini)

Click here to download a PDF version of this paper from SSRN. The paper is available also on arXiv.org here

We present a dialogue on Funding Costs and Counterparty Credit Risk modeling, inclusive of collateral, wrong way risk, gap risk and possible Central Clearing implementation through CCPs. This framework is important following the fact that derivatives valuation and risk analysis has moved from exotic derivatives managed on simple single asset classes to simple derivatives embedding the new or previously neglected types of complex and interconnected nonlinear risks we address here. This dialogue is the continuation of the "Counterparty Risk, Collateral and Funding FAQ" by Brigo (2011). In this dialogue we focus more on funding costs for the hedging strategy of a portfolio of trades, on the non-linearities emerging from assuming borrowing and lending rates to be different, on the resulting aggregation-dependent valuation process and its operational challenges, on the implications of the onset of central clearing, on the macro and micro effects on valuation and risk of the onset of CCPs, on initial and variation margins impact on valuation, and on multiple discount curves. Through questions and answers (Q&A) between a senior expert and a junior colleague, and by referring to the growing body of literature on the subject, we present a unified view of valuation (and risk) that takes all such aspects into account.

Back to scientific/academic works

Back to top

- Disentangling wrong-way risk: pricing CVA via change of measures and drift adjustment (by Damiano Brigo and Frederic Vrins)

Click here to download a PDF version of this paper from arXiv.

A key driver of Credit Value Adjustment (CVA) is the possible dependency between exposure and counterparty credit risk, known as Wrong-Way Risk (WWR). At this time, addressing WWR in a both sound and tractable way remains challenging: arbitrage-free setups have been proposed by academic research through dynamic models but are computationally intensive and hard to use in practice. Tractable alternatives based on resampling techniques have been proposed by the industry, but they lack mathematical foundations. This probably explains why WWR is not explicitly handled in the Basel III regulatory framework in spite of its acknowledged importance. The purpose of this paper is to propose a new method consisting of an appealing compromise: we start from a stochastic intensity approach and end up with a pricing problem where WWR does not enter the picture explicitly. This result is achieved thanks to a set of changes of measure: the WWR effect is now embedded in the drift of the exposure, and this adjustment can be approximated by a deterministic function without affecting the level of accuracy typically required for CVA figures. The performances of our approach are illustrated through an extensive comparison of Expected Positive Exposure (EPE) profiles and CVA figures produced either by (i) the standard method relying on a full bivariate Monte Carlo framework and (ii) our drift-adjustment approximation. Given the uncertainty inherent to CVA, the proposed method is believed to provide a promising way to handle WWR in a sound and tractable way.


- CCP Cleared or Bilateral CSA Trades with Initial/Variation Margins under credit, funding and wrong-way risks: A Unified Valuation Approach (by Damiano Brigo and Andrea Pallavicini)

Click here to download a PDF

version of this paper from SSRN. The paper is available also on arXiv.org here

The introduction of CCPs in most derivative transactions will dramatically change the landscape of derivatives pricing, hedging and risk management, and, according to the TABB group, will lead to an overall liquidity impact of about 2 trillion USD. In this article we develop for the first time a comprehensive approach for pricing under CCP clearing, including variation and initial margins, gap credit risk and collateralization, showing concrete examples for interest rate swaps. Mathematically, the inclusion of asymmetric borrowing and lending rates in the hedge of a claim leads to nonlinearities showing up in claim dependent pricing measures, aggregation dependent prices, nonlinear PDEs and BSDEs. This still holds in the presence of CCPs and CSA. We introduce a modeling approach that allows us to enforce rigorous separation of the interconnected nonlinear risks into different valuation adjustments where the key pricing nonlinearities are confined to a funding costs component that is analyzed through numerical schemes for BSDEs. We present a numerical case study for Interest Rate Swaps that highlights the relative size of the different valuation adjustments and the quantitative role of initial and variation margins, of liquidity bases, of credit risk, of the margin period of risk and of wrong way risk correlations.
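The aggregation dependence mentioned above can be seen in a toy one-period example. This is a sketch under simplifying assumptions, not the paper's model: each position has a fixed cash need, positive needs are borrowed at `borrow_rate`, surpluses are lent at `lend_rate`, and both function names are hypothetical.

```python
def funding_cost(cash_need, borrow_rate, lend_rate, dt):
    """Cost over dt of financing cash_need (> 0: borrow, < 0: lend the surplus)."""
    if cash_need >= 0:
        return borrow_rate * cash_need * dt
    # A surplus earns interest, which shows up as a negative cost.
    return lend_rate * cash_need * dt

def netted_vs_standalone(needs, borrow_rate, lend_rate, dt=1.0):
    """Return (sum of stand-alone costs, cost of the netted portfolio)."""
    standalone = sum(funding_cost(x, borrow_rate, lend_rate, dt) for x in needs)
    netted = funding_cost(sum(needs), borrow_rate, lend_rate, dt)
    return standalone, netted
```

For two offsetting needs of +100 and -100 with a 3% borrowing rate and a 1% lending rate, the stand-alone costs add up to about 2 while the netted portfolio costs nothing. With equal rates the two figures coincide, which is the linear case where valuation does not depend on the aggregation level.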


- Funding, Repo and Credit Inclusion in Option Pricing via Dividends

(by Damiano Brigo, Cristin Buescu, Marek Rutkowski)

Click here to download a PDF

version of this paper from SSRN. The paper is available also on arXiv.org here

This paper specializes a number of earlier contributions to the theory of valuation of financial products in presence of credit risk, repurchase agreements and funding costs. Earlier works, including our own, pointed to the need of tools such as Backward Stochastic Differential Equations (BSDEs) or semi-linear Partial Differential Equations (PDEs), which in practice translate to ad-hoc numerical methods that are time-consuming and which render the full valuation and risk analysis difficult. We specialize here the valuation framework to benchmark derivatives and we show that, under a number of simplifying assumptions, the valuation paradigm can be recast as a Black-Scholes model with dividends. In turn, this allows for a detailed valuation analysis, stress testing and risk analysis via sensitivities. We refer to the full paper for a more complete mathematical treatment.
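A minimal sketch of the kind of formula this recasting leads to: a Black-Scholes call with a continuous dividend yield. The mapping from funding, repo and credit inputs to the effective discount rate and effective dividend yield is derived in the paper and is not reproduced here, so `disc_rate` and `div_yield` are placeholder inputs assumed for illustration.

```python
import math

def norm_cdf(x):
    """Standard normal distribution function via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def bs_call_with_dividends(spot, strike, maturity, vol, disc_rate, div_yield):
    """Black-Scholes call price with continuous dividend yield.

    disc_rate and div_yield stand in for the effective rates that a
    funding/repo/credit-inclusive valuation would supply (assumption).
    """
    fwd = spot * math.exp((disc_rate - div_yield) * maturity)
    sd = vol * math.sqrt(maturity)
    d1 = (math.log(fwd / strike) + 0.5 * sd * sd) / sd
    d2 = d1 - sd
    return math.exp(-disc_rate * maturity) * (fwd * norm_cdf(d1) - strike * norm_cdf(d2))

def bump_sensitivity(param, bump=1e-4, **kwargs):
    """Central finite-difference sensitivity to one named input."""
    up = dict(kwargs, **{param: kwargs[param] + bump})
    down = dict(kwargs, **{param: kwargs[param] - bump})
    return (bs_call_with_dividends(**up) - bs_call_with_dividends(**down)) / (2.0 * bump)
```

Because the price is available in closed form, stress tests and sensitivities to each effective rate reduce to cheap bumps of the inputs, for instance `bump_sensitivity("div_yield", spot=100.0, strike=100.0, maturity=1.0, vol=0.2, disc_rate=0.05, div_yield=0.01)`.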


- Invariance, existence and uniqueness of solutions of nonlinear valuation PDEs and FBSDEs inclusive of credit risk, collateral and funding costs

(by Damiano Brigo, Marco Francischello, Andrea Pallavicini)

Click here to download a PDF

version of this paper from SSRN. The paper is available also on arXiv.org here

We study conditions for existence, uniqueness and invariance of the comprehensive nonlinear valuation equations first introduced in Pallavicini et al (2011). These equations take the form of semilinear PDEs and Forward-Backward Stochastic Differential Equations (FBSDEs). After summarizing the cash flows definitions allowing us to extend valuation to credit risk and default closeout, including collateral margining with possible re-hypothecation, and treasury funding costs, we show how such cash flows, when present-valued in an arbitrage free setting, lead to semi-linear PDEs or more generally to FBSDEs. We provide conditions for existence and uniqueness of such solutions in a viscosity and classical sense, discussing the role of the hedging strategy. We show an invariance theorem stating that even though we start from a risk-neutral valuation approach based on a locally risk-free bank account growing at a risk-free rate, our final valuation equations do not depend on the risk free rate. Indeed, our final semilinear PDE or FBSDEs and their classical or viscosity solutions depend only on contractual, market or treasury rates and we do not need to proxy the risk free rate with a real market rate, since it acts as an instrumental variable. The equations derivations, their numerical solutions, the related XVA valuation adjustments with their overlap, and the invariance result had been analyzed numerically and extended to central clearing and multiple discount curves in a number of previous works, including Pallavicini et al (2011), Pallavicini et al (2012), Brigo et al (2013), Brigo and Pallavicini (2014), and Brigo et al (2014).


- Nonlinear Valuation under Collateral, Credit Risk and Funding Costs: A Numerical Case Study Extending Black-Scholes

(by Damiano Brigo, Qing Daphne Liu, Andrea Pallavicini, David Sloth)

Click here to download a PDF

version of this paper from SSRN. The paper is available also on arXiv.org here

We develop an arbitrage-free framework for consistent valuation of derivative trades with collateralization, counterparty credit gap risk, and funding costs, following the approach first proposed by Pallavicini and co-authors in 2011. Based on the risk-neutral pricing principle, we derive a general pricing equation where Credit, Debit, Liquidity and Funding Valuation Adjustments (CVA, DVA, LVA and FVA) are introduced by simply modifying the payout cash-flows of the deal. Funding costs and specific close-out procedures at default break the bilateral nature of the deal price and render the valuation problem a non-linear and recursive one. CVA and FVA are in general not really additive adjustments, and the risk for double counting is concrete. We introduce a new adjustment, called a Non-linearity Valuation Adjustment (NVA), to address double-counting. Our framework is based on real market rates, since the theoretical risk free rate disappears from our final equations. The framework addresses common market practices of ISDA governed deals without restrictive assumptions on collateral margin payments and close-out netting rules, and can be tailored also to CCP trading under initial and variation margins, as explained in detail in Brigo and Pallavicini (2014). In particular, we allow for asymmetric collateral and funding rates, replacement close-out and re-hypothecation. The valuation equation takes the form of a backward stochastic differential equation or semi-linear partial differential equation, and can be cast as a set of iterative equations that can be solved by least-squares Monte Carlo. We propose such a simulation algorithm in a case study involving a generalization of the benchmark model of Black and Scholes for option pricing. Our numerical results confirm that funding risk has a non-trivial impact on the deal price, and that double counting matters too. 
We conclude the article with an analysis of large scale implications of non-linearity of the pricing equations: non-separability of risks, aggregation dependence in valuation, and local pricing measures as opposed to universal ones. This prompts a debate and a comparison between the notions of price and value, and will impact the operational structure of banks. This paper is an evolution, in particular, of the work by Pallavicini et al. (2011, 2012), Pallavicini and Brigo (2013), and Sloth (2013).


- Impact of Multiple Curve Dynamics in Credit Valuation Adjustments under Collateralization

(by Andrea Bormetti, Damiano Brigo, Marco Francischello, Andrea Pallavicini)

Click here to download a PDF

version of this paper from SSRN. The paper is available also on arXiv.org here

We present a detailed analysis of interest rate derivatives valuation under credit risk and collateral modeling. We show how the credit and collateral extended valuation framework in Pallavicini et al (2011), and the related collateralized valuation measure, can be helpful in defining the key market rates underlying the multiple interest rate curves that characterize current interest rate markets. A key point is that spot Libor rates are to be treated as market primitives rather than being defined by no-arbitrage relationships. We formulate a consistent realistic dynamics for the different rates emerging from our analysis and compare the resulting model performances to simpler models used in the industry. We include the often neglected margin period of risk, showing how this feature may increase the impact of different rates dynamics on valuation. We point out limitations of multiple curve models with deterministic basis considering valuation of particularly sensitive products such as basis swaps. We stress that a proper wrong way risk analysis for such products requires a model with a stochastic basis and we show numerical results confirming this fact.


- Funding, Collateral and Hedging: Uncovering the Mechanics and the Subtleties of Funding Valuation Adjustments

(by Andrea Pallavicini, Daniele Perini and Damiano Brigo)

Click here to download a PDF

version of this paper from SSRN. The paper is available also on arXiv.org here

The main result of this paper is a collateralized counterparty valuation adjusted pricing equation, which allows one to price a deal while taking into account credit and debit valuation adjustments (CVA, DVA) along with margining and funding costs, all in a consistent way. Funding risk breaks the bilateral nature of the valuation formula. We find that the equation has a recursive form, making the introduction of a purely additive funding valuation adjustment (FVA) difficult. Yet, we can cast the pricing equation into a set of iterative relationships which can be solved by means of standard least-squares Monte Carlo techniques. As a consequence, we find that identifying funding costs and debit valuation adjustments is not tenable in general, contrary to what has been suggested in the literature in simple cases. The assumptions under which funding costs vanish are a very special case of the more general theory. We define a comprehensive framework that allows us to derive earlier results on funding or counterparty risk as a special case, although our framework is more than the sum of such special cases. We derive the general pricing equation by resorting to a risk-neutral approach where the new types of risks are included by modifying the payout cash flows. We consider realistic settings and include in our models the common market practices suggested by ISDA documentation, without assuming restrictive constraints on margining procedures and close-out netting rules. In particular, we allow for asymmetric collateral and funding rates, and exogenous liquidity policies and hedging strategies. Re-hypothecation liquidity risk and close-out amount evaluation issues are also covered. Finally, relevant examples of non-trivial settings illustrate how to derive known facts about discounting curves from a robust general framework and without resorting to ad hoc hypotheses.


- Funding Valuation Adjustment: A Consistent Framework Including CVA, DVA, Collateral, Netting Rules and Re-Hypothecation

(by Andrea Pallavicini, Daniele Perini and Damiano Brigo)

Click here to download a PDF

version of this paper. The paper is available also on ssrn.com and arXiv.org

In this paper we describe how to include funding and margining costs into a risk-neutral pricing framework for counterparty credit risk. We consider realistic settings and we include in our models the common market practices suggested by the ISDA documentation without assuming restrictive constraints on margining procedures and close-out netting rules. In particular, we allow for asymmetric collateral and funding rates, and exogenous liquidity policies and hedging strategies. Re-hypothecation liquidity risk and close-out amount evaluation issues are also covered. We define a comprehensive pricing framework which allows us to derive earlier results on funding or counterparty risk. Some relevant examples illustrate the non-trivial settings needed to derive known facts about discounting curves by starting from a general framework and without resorting to ad hoc hypotheses. Our main result is a bilateral collateralized counterparty valuation adjusted pricing equation, which allows one to price a deal while taking into account credit and debit valuation adjustments along with margining and funding costs in a coherent way. We find that the equation has a recursive form, making the introduction of an additive funding valuation adjustment difficult. Yet, we can cast the pricing equation into a set of iterative relationships which can be solved by means of standard least-squares Monte Carlo techniques.


- An indifference approach to the cost of capital constraints: KVA and beyond

(by Damiano Brigo, Marco Francischello and Andrea Pallavicini)

Click here to download a PDF

version of this paper. The paper is available also on ssrn.com



- Restructuring Counterparty Credit Risk

(by Claudio Albanese, Damiano Brigo and Frank Oertel)

Click here to download a PDF

version of this paper. This paper has been submitted to the Bundesbank paper research series.

We introduce an innovative theoretical framework for the valuation and replication of derivative transactions between defaultable entities based on the principle of arbitrage freedom. Our framework extends the traditional formulations based on Credit and Debit Valuation Adjustments (CVA and DVA).

Depending on how the default contingency is accounted for, we list a total of ten different structuring styles. These include bi-partite structures between a bank and a counterparty, tri-partite structures with one margin lender in addition, quadri-partite structures with two margin lenders and, most importantly, configurations where all derivative transactions are cleared through a Central Counterparty Clearing House (CCP).

We compare the various structuring styles under a number of criteria including consistency from an accounting standpoint, counterparty risk hedgeability, numerical complexity, transaction portability upon default, induced behaviour and macro-economic impact of the implied wealth allocation.


- Illustrating a problem in the self-financing condition in two 2010-2011 papers on funding, collateral and discounting

(by Damiano Brigo, Cristin Buescu, Andrea Pallavicini and Qing Liu)

Click here to download a PDF

version of this paper.

We illustrate a problem in the self-financing condition used in the papers "Funding beyond discounting: collateral agreements and derivatives pricing" (Risk Magazine, February 2010) and "Partial Differential Equation Representations of Derivatives with Counterparty Risk and Funding Costs" (The Journal of Credit Risk, 2011). These papers state an erroneous self-financing condition. In the first paper, this is equivalent to assuming that the equity position is self-financing on its own and without including the cash position. In the second paper, this is equivalent to assuming that a subportfolio is self-financing on its own, rather than the whole portfolio. The error in the first paper is avoided when clearly distinguishing between price processes, dividend processes and gain processes. We present an outline of the derivation that yields the correct statement of the self-financing condition, clarifying the structure of the relevant funding accounts, and show that the final result in "Funding beyond discounting" is correct, even if the self-financing condition stated is not.


- Multi Currency Credit Default Swaps Quanto effects and FX devaluation jumps

(by Damiano Brigo, Nicola Pede, Andrea Petrelli)

Click here to download a PDF

version of this paper from arXiv, or download it from SSRN here

Credit Default Swaps (CDS) on a reference entity may be traded in multiple currencies, in that protection upon default may be offered either in the domestic currency where the entity resides, or in a more liquid and global foreign currency. In this situation currency fluctuations clearly introduce a source of risk on CDS spreads. For emerging markets, but in some cases even in well developed markets, the risk of dramatic Foreign Exchange (FX) rate devaluation in conjunction with default events is relevant. We address this issue by proposing and implementing a model that considers the risk of foreign currency devaluation that is synchronous with default of the reference entity. As a fundamental example we consider the sovereign CDSs on Italy, quoted both in EUR and USD.

Preliminary results indicate that perceived risks of devaluation can induce a significant basis across domestic and foreign CDS quotes. For the Republic of Italy, a USD CDS spread quote of 440 bps can translate into a EUR quote of 350 bps in the middle of the Euro-debt crisis in the first week of May 2012. More recently, from June 2013, the basis spreads between the EUR quotes and the USD quotes are in the range around 40 bps.

We explain in detail the sources for such discrepancies. Our modeling approach is based on the reduced form framework for credit risk, where the default time is modeled in a Cox process setting with explicit diffusion dynamics for default intensity/hazard rate and exponential jump to default. For the FX part, we include an explicit default-driven jump in the FX dynamics. As our results show, such a mechanism provides a further and more effective way to model credit/FX dependency than the instantaneous correlation that can be imposed among the driving Brownian motions of default intensity and FX rates, as it is not possible to explain the observed basis spreads during the Euro-debt crisis by using the latter mechanism alone.


- Credit Default Swaps Liquidity modeling: A survey

(by Damiano Brigo, Mirela Predescu and Agostino Capponi)

Click here to download a PDF

version of this paper from arXiv, or download it from SSRN here

We review different approaches for measuring the impact of liquidity on CDS prices. We start with reduced form models incorporating liquidity as an additional discount rate. We review Chen, Fabozzi and Sverdlove (2008) and Buhler and Trapp (2006, 2008), which adopt different assumptions on how liquidity rates enter the CDS premium rate formula, on the dynamics of liquidity rate processes and on the credit-liquidity correlation. Buhler and Trapp (2008) provides the most general and realistic framework, incorporating correlation between liquidity and credit, liquidity spillover effects between bonds and CDS contracts and asymmetric liquidity effects on the Bid and Ask CDS premium rates. We then discuss the Bongaerts, De Jong and Driessen (2009) study, which derives an equilibrium asset pricing model incorporating liquidity effects. Findings include that both expected illiquidity and liquidity risk have a statistically significant impact on expected CDS returns. We conclude our review with a discussion of Predescu et al (2009), which also analyzes in-crisis data. This is a statistical model that associates an ordinal liquidity score with each CDS reference entity and allows one to compare liquidity of over 2400 reference entities. This study points out that credit and illiquidity are correlated, with a smile pattern. All these studies highlight that CDS premium rates are not pure measures of credit risk. Further research is needed to measure liquidity premium at CDS contract level and to disentangle liquidity from credit effectively.


- CoCo Bonds Valuation with Equity- and Credit-Calibrated First Passage Structural Models

(by Damiano Brigo, Joao Garcia and Nicola Pede)

Click here to download a PDF

version of this paper from SSRN, or download it from arXiv here

After the beginning of the credit and liquidity crisis, financial institutions have been considering creating a convertible-bond type contract focusing on Capital. Under the terms of this contract, a bond is converted into equity if the authorities deem the institution to be under-capitalized. This paper discusses this Contingent Capital (or CoCo) bond instrument and presents a pricing methodology based on firm value models. The model is calibrated to readily available market data. A stress test of model parameters is illustrated to account for potential model risk. Finally, a brief overview of how the instrument performs is presented.


- Arbitrage-free Pricing of Credit Index Options: The no-armageddon pricing measure and the role of correlation after the subprime crisis (by Massimo Morini and Damiano Brigo)

Click here to download a PDF version of this paper, or download it from the SSRN web site here.

In this work we consider three problems of the standard market approach to pricing of credit index options: the definition of the index spread is not valid in general, the usually considered payoff leads to a pricing which is not always defined, and the candidate numeraire one would use to define a pricing measure is not strictly positive, which would lead to a non-equivalent pricing measure. We give a general mathematical solution to the three problems, based on a novel way of modeling the flow of information through the definition of a new subfiltration. Using this subfiltration, we take into account consistently the possibility of default of all names in the portfolio, that is neglected in the standard market approach. We show that, while the related mispricing can be negligible for standard options in normal market conditions, it can become highly relevant for different options or in stressed market conditions.

In particular, we show on 2007 market data that after the subprime credit crisis the mispricing of the market formula compared to the no arbitrage formula we propose has become financially relevant even for the liquid Crossover Index Options.


- Credit models and the crisis, or: How I learned to stop worrying and love the CDOs (by Damiano Brigo, Andrea Pallavicini and Roberto Torresetti).

A vastly extended and updated version of this paper will appear as a book: "Credit models and the crisis: a journey into CDOs, copulas, correlations and dynamic models", Wiley, Chichester, 2010

Click here (SSRN) or here (arXiv) to download a PDF version of this paper.

We follow a long path for Credit Derivatives and Collateralized Debt Obligations (CDOs) in particular, from the introduction of the Gaussian copula model and the related implied correlations to the introduction of arbitrage-free dynamic loss models capable of calibrating all the tranches for all the maturities at the same time. En passant, we also illustrate the implied copula, a method that can consistently account for CDOs with different attachment and detachment points but not for different maturities. The discussion is abundantly supported by market examples through history. The dangers and criticisms we present regarding the use of the Gaussian copula and of implied correlation had all been published by us, among others, in 2006, showing that the quantitative community was aware of the model limitations before the crisis. We also explain why the Gaussian copula model is still used in its base correlation formulation, although under some possible extensions such as random recovery. Overall we conclude that the modeling effort in this area of the derivatives market is unfinished, partly for the lack of an operationally attractive single-name consistent dynamic loss model, and partly because of the diminished investment in this research area.


- Default correlation, cluster dynamics and single names: The GPCL dynamical loss model (by Damiano Brigo, Andrea Pallavicini and Roberto Torresetti)

Click here to download a PDF version of this paper, or download it from the SSRN web site here.

We extend the common Poisson shock framework reviewed for example in Lindskog and McNeil (2003) to a formulation avoiding repeated defaults, thus obtaining a model that can account consistently for single name default dynamics, cluster default dynamics and default counting process. This approach allows one to introduce significant dynamics, improving on the standard "bottom-up" approaches, and to achieve true consistency with single names, improving on most "top-down" loss models. Furthermore, the resulting GPCL model has important links with the previous GPL dynamical loss model in Brigo, Pallavicini and Torresetti (2006a,b), which we point out. Model extensions allowing for more articulated spread and recovery dynamics are hinted at. Calibration to both DJi-TRAXX and CDX index and tranche data across attachments and maturities shows that the GPCL model has the same calibration power as the GPL model while allowing for consistency with single names.


- Calibration of CDO Tranches with the dynamical Generalized-Poisson loss model (by Damiano Brigo, Andrea Pallavicini and Roberto Torresetti)

Click here to download a PDF version of this paper, or download it from the SSRN web site here.

In the first part we consider a dynamical model for the number of defaults of a pool of names. The model is based on the notion of generalized Poisson process, allowing for more than one default in small time intervals, contrary to many alternative approaches to loss modeling. We illustrate how to define the pool default intensity and discuss recovery assumptions. The models are tractable, pricing and simulation are straightforward, and consistent calibration to quoted index CDO tranches and tranchelets for several maturities is feasible, as we illustrate with numerical examples. In the second part we model directly the pool loss and we introduce extensions based on piecewise gamma, scenario-based or CIR random intensities, leading to richer spread dynamics, investigating calibration improvements and stability.
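To make the idea of several defaults arriving at once concrete, here is a small simulation sketch. It assumes one common reading of a generalized Poisson construction: independent Poisson processes, each of which adds a fixed number of defaults per jump, with the total capped at the pool size. The constant intensities, the jump sizes and the function names are illustrative assumptions, not calibrated values or the authors' code.

```python
import math
import random

def poisson_draw(rng, mean):
    """Knuth's multiplication method; adequate for the moderate means used here."""
    threshold = math.exp(-mean)
    k, p = 0, rng.random()
    while p > threshold:
        k += 1
        p *= rng.random()
    return k

def simulate_default_counts(intensities, jump_sizes, horizon, pool_size, n_paths, seed=0):
    """Simulate the number of defaults at the horizon on each path.

    Component j jumps with constant intensity intensities[j] and each of
    its jumps adds jump_sizes[j] defaults at once (cluster default).
    """
    rng = random.Random(seed)
    counts = []
    for _ in range(n_paths):
        total = 0
        for lam, size in zip(intensities, jump_sizes):
            total += size * poisson_draw(rng, lam * horizon)
        # The pool cannot lose more names than it contains.
        counts.append(min(total, pool_size))
    return counts
```

A rare component with a large jump size produces the occasional cluster of defaults, which fattens the tail of the default-count distribution compared with a single Poisson process of the same mean.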


- Risk Neutral versus Objective Loss Distribution and CDO Tranches Valuation (by Damiano Brigo, Andrea Pallavicini and Roberto Torresetti)

Click here to directly download a PDF version of this paper or here to see it from the SSRN web site.

We consider the risk neutral loss distribution as implied by index CDO tranche quotes through a “scenario default rate” model as opposed to the objective measure loss distribution based on historical analysis. The risk neutral loss distribution turns out to privilege large realizations of the loss with respect to the objective distribution, thus implying the well known presence of a risk premium. We quantify this difference numerically by pricing CDO tranches and indices under the two distributions. En passant we analyze the implied risk neutral default rate distributions calibrated from April 2004 through April 2006, pointing out their distinctive “bump feature” in the tail.


- Implied Expected Tranched Loss Surface from CDO Data (by Damiano Brigo, Andrea Pallavicini and Roberto Torresetti)

Click here to download a PDF version of this paper, or download it from the SSRN web site.

We explain how the payoffs of credit indices and tranches are valued in terms of expected tranched losses (ETL). ETL are natural quantities to imply from market data. No-arbitrage constraints on ETL's as attachment points and maturities change are introduced briefly. As an alternative to the temporally inconsistent notion of implied correlation we consider the ETL surface, built directly from market quotes given minimal interpolation assumptions. We check that the kind of interpolation does not interfere excessively. Instruments bid/asks enter our analysis, contrary to Walker's (2006) earlier work on the ETL implied surface. By doing so we find fewer, indeed very few, violations of the no-arbitrage conditions. The ETL implied surface can be used to value tranches with nonstandard attachments and maturities as an alternative to implied correlation.
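The no-arbitrage constraints mentioned above can be checked mechanically on a grid. The sketch below uses the generic constraints that follow from the pool loss being non-decreasing in time and bounded: each width-normalised ETL lies in [0, 1], does not decrease with maturity, and does not increase with seniority. The grid layout, the `tol` parameter (a crude stand-in for bid/ask slack) and the function name are assumptions of this sketch, not the paper's procedure.

```python
def etl_violations(etl, tol=0.0):
    """List no-arbitrage violations on an expected tranched loss grid.

    etl[i][j] is the ETL of tranche i, normalised by tranche width, at
    maturity j. Tranches are ordered junior to senior and do not overlap;
    maturities are ordered short to long.
    """
    issues = []
    for i, row in enumerate(etl):
        for j, x in enumerate(row):
            if x < -tol or x > 1.0 + tol:
                issues.append(("range", i, j))
            # Losses accumulate, so ETL cannot fall as maturity grows.
            if j > 0 and x < row[j - 1] - tol:
                issues.append(("maturity", i, j))
            # A more senior tranche cannot lose a larger fraction of its width.
            if i > 0 and x > etl[i - 1][j] + tol:
                issues.append(("seniority", i, j))
    return issues
```

An empty list means the grid passes all three checks at the chosen tolerance; each reported tuple names the violated constraint and the tranche and maturity indices where it occurs.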


- Implied Correlation in CDO Tranches: A Paradigm To Be Handled with Care (by Damiano Brigo, Andrea Pallavicini and Roberto Torresetti)

Click here to download a PDF version of this paper, or download it from the SSRN web site.

We illustrate the two main types of implied correlation one may obtain from market CDO tranche spreads. Compound correlation is more consistent at single tranche level but for some market CDO tranche spreads cannot be implied. Base correlation is less consistent but more flexible and can be implied for a much wider set of CDO tranche market spreads. Furthermore, base correlation is more easily interpolated and leads to the possibility to price non-standard detachments. Even so, Base correlation may lead to negative expected tranche losses, thus violating basic no-arbitrage conditions. We illustrate these features with numerical examples.


- Charting a Course Through the CDS Big Bang (by Johan Beumee, Damiano Brigo, Daniel Schiemert and Gareth Stoyle)

Click here to download a PDF version of this paper, or download it from the SSRN web site.

Following the recent introduction of new forms of Credit Default Swap (CDS) contracts expressed as upfront payments plus a fixed coupon, this note examines the methodology suggested by Barclays Capital, Goldman Sachs, JPMorgan, Markit (BGJM)/ISDA (2009), for conversion of CDS quotes between upfront and running. The proposed flat hazard rate (FHR) conversion method is to be understood as a rule-of-thumb single-contract quoting mechanism rather than as a modelling device. For example, a hypothetical investor who put the FHR converted running spreads into her old running CDS library would strip wrong hazard rates, inconsistent with those coming directly from the quoted term structure of upfronts.

This new methodology appears mostly as a device to move the market towards adoption of the new upfront CDS as direct trading products while maintaining a semblance of running quotes for investors who may be struggling with the transition. We caution, though, that

i) the conversion done with proper hazard rates consistent across term would produce different results;

ii) the quantities involved in the conversion should not be used as modelling tools anywhere; and

iii) for highly distressed names with a high upfront paid by the protection buyer, the conversion to running spreads fails unless, as we propose, a third recovery scenario of 0% is added to the suggested 20% and 40%.

This paper is not meant as a criticism of the proposed standardization of the conversion method but as a warning on the confusion this may generate when the method is not used carefully.
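
The third caveat can be illustrated with a minimal sketch. It assumes a deliberately simplified setting that is not the BGJM/ISDA standard model: a flat, strictly positive, continuously compounded interest rate, a premium leg paid continuously, and a single flat hazard rate. All function names and numbers are hypothetical. Under these assumptions the upfront is increasing in the hazard rate and bounded above by one minus the recovery, so an upfront above that bound cannot be converted into a running spread.

```python
import math


def upfront_from_flat_hazard(lam, coupon, recovery, maturity, rate):
    """Upfront paid by the protection buyer, as a fraction of notional.

    Simplifying assumptions (not the ISDA standard model): flat hazard rate
    `lam`, flat continuously compounded `rate` > 0, premium paid continuously.
    """
    k = rate + lam
    # Risky annuity: integral of exp(-(rate + lam) * t) over [0, maturity].
    annuity = (1.0 - math.exp(-k * maturity)) / k
    # Protection leg minus fixed-coupon premium leg.
    return ((1.0 - recovery) * lam - coupon) * annuity


def running_spread_from_upfront(upfront, coupon, recovery, maturity, rate,
                                lam_max=1.0e4):
    """Flat-hazard-rate running spread matching `upfront`, or None if none exists."""
    def gap(lam):
        return upfront_from_flat_hazard(lam, coupon, recovery, maturity, rate) - upfront

    lo, hi = 0.0, lam_max
    if gap(lo) > 0.0 or gap(hi) < 0.0:
        return None  # upfront not attainable with this recovery assumption
    for _ in range(200):  # bisection; the upfront is increasing in lam
        mid = 0.5 * (lo + hi)
        if gap(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    lam = 0.5 * (lo + hi)
    return (1.0 - recovery) * lam  # par running spread under the same assumptions


if __name__ == "__main__":
    # Hypothetical distressed name: 85% upfront, 5% fixed coupon, 5y, 3% rate.
    for recovery in (0.4, 0.2, 0.0):
        print(recovery, running_spread_from_upfront(0.85, 0.05, recovery, 5.0, 0.03))
```

In this toy setting the conversion returns no spread for the 40% and 20% recovery scenarios and succeeds only with 0% recovery, which is the kind of failure the caveat describes.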

- Credit

Default Swaps Calibration and Option Pricing with the SSRD Stochastic

Intensity and Interest-Rate Model (by Damiano Brigo and Aurelien Alfonsi) (earlier title: A two-dimensional shifted

square-root diffusion model for credit derivatives: calibration, pricing

and the impact of correlation)

Paper

presented at the conference RISK EUROPE, April 8-9, 2003, Paris, and at the

6th Columbia-JAFEE International Conference, Tokyo, March 15-16, 2003. Reduced

version in Finance and Stochastics. Click here to download a PDF version of this paper.

In the present paper

we introduce a two-dimensional shifted square-root diffusion (SSRD) model for

interest rate derivatives and single-name credit derivatives, in a stochastic

intensity framework. The SSRD is the only model, to the best of our

knowledge, allowing for an automatic calibration of the term structure of

interest rates and of credit default swaps (CDS's). Moreover, the model retains

free dynamics parameters that can be used to calibrate option data, such as

caps for the interest rate market and options on CDS's in the credit market.

The calibrations to the interest-rate market and to the credit market can be

kept separate, thus realizing a superposition that is of practical value.

We discuss the impact of interest-rate and

default-intensity correlation on calibration and pricing, and test it by means

of Monte Carlo simulation. We use a variant of Jamshidian's decomposition to

derive an analytical formula for CDS options under CIR++ stochastic intensity.

Finally, we develop an analytical approximation based on a Gaussian dependence

mapping for some basic credit derivatives terms involving correlated CIR processes.
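
The "automatic calibration" of the credit term structure can be sketched as follows, assuming the usual CIR++ construction in which the intensity is a CIR process plus a deterministic shift. The integrated shift is then fixed by the ratio between the CIR survival probability and the market-implied one. The market curve, the parameter values and the function names below are hypothetical, and interest rates are left out for brevity.

```python
import math


def cir_survival(t, y0, kappa, theta, sigma):
    """Closed form for E[exp(-integral_0^t y_s ds)] when y is a CIR process."""
    h = math.sqrt(kappa ** 2 + 2.0 * sigma ** 2)
    denom = 2.0 * h + (kappa + h) * (math.exp(h * t) - 1.0)
    a = (2.0 * h * math.exp(0.5 * (kappa + h) * t) / denom) ** (
        2.0 * kappa * theta / sigma ** 2)
    b = 2.0 * (math.exp(h * t) - 1.0) / denom
    return a * math.exp(-b * y0)


def integrated_shift(t, market_survival, y0, kappa, theta, sigma):
    """Psi(t): integral of the deterministic shift that fits the market curve."""
    return math.log(cir_survival(t, y0, kappa, theta, sigma) / market_survival)


def model_survival(t, psi_t, y0, kappa, theta, sigma):
    """Survival probability of the shifted model: exp(-Psi(t)) times the CIR part."""
    return math.exp(-psi_t) * cir_survival(t, y0, kappa, theta, sigma)


if __name__ == "__main__":
    # Hypothetical dynamics parameters and a flat 4% market hazard curve.
    params = dict(y0=0.02, kappa=0.5, theta=0.03, sigma=0.1)
    for t in (1.0, 3.0, 5.0):
        q_mkt = math.exp(-0.04 * t)
        psi = integrated_shift(t, q_mkt, **params)
        print(t, psi, model_survival(t, psi, **params))
```

Whatever dynamics parameters are chosen, the shifted model reproduces the market survival curve by construction, which leaves those parameters free for option data. A practical calibration would also check that the implied shift keeps the intensity positive.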

- A Comparison

between the SSRD Model and the Market Model for CDS Options Pricing (by

Damiano Brigo and Laurent

Cousot)

Presented at the Third Bachelier Conference on

Mathematical Finance, Chicago, 2004. Click here to

download a PDF file containing this paper, from Laurent Cousot's web site at

NYU. Updated version to appear in the "International Journal of Theoretical and Applied

Finance".

In this paper we investigate

implied volatility patterns in the Shifted Square Root Diffusion (SSRD) model

as functions of the model parameters. We begin by recalling the Credit Default

Swap (CDS) options market model that is consistent with a market Black-like

formula, thus introducing a notion of implied volatility for CDS options. We

examine implied volatilities coming from SSRD prices and characterize the

qualitative behavior of implied volatilities as functions of the SSRD model

parameters. We introduce an analytical approximation for the SSRD implied

volatility that follows the same patterns in the model parameters and that can

be used to have a first rough estimate of the implied volatility following a

calibration. We compute numerically the CDS-rate volatility smile for the

adopted SSRD model. We find a decreasing pattern of SSRD implied volatilities

in the interest-rate/intensity correlation. We check whether it is possible to

assume zero correlation after the option maturity in computing the option price

and provide an upper bound for the Monte Carlo standard error in cases where

this is not possible.
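
The notion of implied volatility used here can be made concrete with a short sketch, assuming the market formula takes the usual Black form: a defaultable annuity multiplied by a Black call on the forward CDS rate. The inputs are hypothetical numbers, and the bisection inversion is only one simple way to back out the volatility from a price such as one produced by the SSRD model.

```python
import math


def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def black_payer_cds_option(annuity, forward_rate, strike, vol, expiry):
    """Black-type price of a payer CDS option (assumed form of the market formula)."""
    std = vol * math.sqrt(expiry)
    d1 = (math.log(forward_rate / strike) + 0.5 * std ** 2) / std
    d2 = d1 - std
    return annuity * (forward_rate * normal_cdf(d1) - strike * normal_cdf(d2))


def implied_vol(price, annuity, forward_rate, strike, expiry, lo=1e-6, hi=5.0):
    """Invert the Black-type formula by bisection (the price is increasing in vol)."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if black_payer_cds_option(annuity, forward_rate, strike, mid, expiry) < price:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    # Hypothetical inputs: annuity 4.0, forward CDS rate 100bp, strike 120bp, 1y expiry.
    price = black_payer_cds_option(4.0, 0.010, 0.012, 0.6, 1.0)
    print(price, implied_vol(price, 4.0, 0.010, 0.012, 1.0))
```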

- A Dynamic Programming Approach for Pricing CDS and CDS

Options (by Hatem Ben Ameur, Damiano Brigo and Eymen Errais)

Updated version published in Quantitative Finance. Click here to download a copy from Eymen Errais' web site at Stanford.

We

propose a general setting for pricing single-name knock-out credit derivatives.

Examples include Credit Default Swaps (CDS), European and Bermudan CDS options.

The default of the underlying reference entity is modeled within a doubly

stochastic framework where the default intensity follows a CIR++ process. We

estimate the model parameters through a combination of a cross sectional

calibration based method and a historical estimation approach. We propose a

numerical procedure based on dynamic programming and a piecewise linear

approximation to price American-style knock-out credit options. Our numerical

investigation shows consistency, convergence and efficiency. We find that

American-style CDS options can complete the credit derivatives market by

allowing the investor to focus on spread movements rather than on the default event.

- Credit

Derivatives Pricing with a Smile-Extended Jump Stochastic Intensity Model

(by Damiano Brigo and Naoufel El-Bachir)

Click here to download

a PDF version of this paper from the ICMA Centre.

We present a

two-factor stochastic default intensity and interest rate model for pricing

single-name default swaptions. The specific positive square root processes

considered fall in the relatively tractable class of affine jump diffusions

while allowing for inclusion of stochastic volatility and jumps in default swap

spreads. Separable calibration to interest-rate and credit products is feasible,

as we illustrate with examples on the basic model and its variants.

Numerical experiments show that the calibrated model can generate plausible

volatility smiles. Hence, the model can be calibrated to a default swap term

structure and a few default swaptions, and the calibrated parameters can be used

to value consistently other default swaptions (different strikes and

maturities, or more complex structures) on the same credit reference name.

- An Exact

Formula for Default Swaptions Pricing in the SSRJD Stochastic Intensity

Model (by Damiano Brigo and Naoufel El-Bachir)

Click here to download a PDF version of this paper from the

ICMA Centre.

We

develop and test a fast and accurate semi-analytical formula for single-name

default swaptions in the context of the shifted square root jump diffusion

(SSRJD) default intensity model. The formula consists of a decomposition of an

option on a summation of survival probabilities into a summation of options on

the underlying survival probabilities, where the strike for each option is

adjusted.
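
The idea of turning an option on a sum into a sum of options with adjusted strikes can be sketched in a one-factor toy setting. This is the classical Jamshidian-type argument, not the SSRJD formula itself: it assumes each term is a decreasing function of a single state variable x, here a hypothetical exponential-affine form A_i exp(-B_i x) with made-up coefficients and weights.

```python
import math

# Hypothetical terms P_i(x) = A[i] * exp(-B[i] * x), each decreasing in x.
A = (0.99, 0.97, 0.94)
B = (0.9, 1.7, 2.4)
WEIGHTS = (0.25, 0.25, 0.25)


def term(i, x):
    return A[i] * math.exp(-B[i] * x)


def weighted_sum(x):
    return sum(w * term(i, x) for i, w in enumerate(WEIGHTS))


def critical_state(strike, lo=-10.0, hi=10.0):
    """State x* at which the weighted sum equals the strike (bisection)."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if weighted_sum(mid) > strike:
            lo = mid  # the sum is decreasing in x, so the root lies to the right
        else:
            hi = mid
    return 0.5 * (lo + hi)


def payoff_on_sum(x, strike):
    """Payoff of a call on the weighted sum."""
    return max(weighted_sum(x) - strike, 0.0)


def sum_of_payoffs(x, strike):
    """Weighted sum of calls on each term, struck at K_i = P_i(x*)."""
    x_star = critical_state(strike)
    adjusted = [term(i, x_star) for i in range(len(A))]
    return sum(w * max(term(i, x) - adjusted[i], 0.0)
               for i, w in enumerate(WEIGHTS))


if __name__ == "__main__":
    for x in (-0.5, 0.0, 0.2, 0.5):
        print(x, payoff_on_sum(x, 0.6), sum_of_payoffs(x, 0.6))
```

Because the two payoffs agree state by state, their expectations agree as well, so the option on the sum can be priced as a sum of single-term options.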

- Candidate

Market Models and the Calibrated CIR++ Stochastic Intensity Model for

Credit Default Swap Options and Callable Floaters (by Damiano Brigo)

In: Proceedings of the 4th ICS Conference on

Statistical Finance, Tokyo, March 18-19, 2004.

Click here to download a PDF version of this

paper.

We

consider the standard Credit Default Swap (CDS) payoff and some alternative

approximated versions, stemming from different conventions on the premium and

protection legs. We consider standard running CDS (RCDS), upfront CDS and

postponed-payments running CDS (PRCDS). Each different definition implies a

different definition of forward CDS rate, which we consider in some detail.

We introduce defaultable floating rate notes (FRN's). We point out which kind

of CDS payoff produces a forward CDS rate that is equal to the fair spread in

the considered defaultable FRN. An approximated equivalence between CDS's and

defaultable FRN's is established, which allows one to view CDS options as

structurally similar to the optional component in defaultable callable notes.

We briefly investigate the possibility of expressing

forward CDS rates in terms of some basic rates and discuss a possible analogy

with the LIBOR and swap default-free models. Finally, we discuss the change of

numeraire approach for deriving a Black-like formula for CDS options or, equivalently,

defaultable callable FRN's. We also introduce an analytical formula for CDS

option prices under the CDS-calibrated SSRD stochastic-intensity model, and

discuss the impact of the different CIR++ dynamics parameters on the related

CDS options implied volatilities. Hints on possible methods for smile modeling

of CDS options are given for possible future developments of the CDS option

market.

- Constant Maturity Credit Default Swap

Pricing with Market Models (by Damiano Brigo)

To download a PDF copy of this paper go to

the SSRN website clicking here, or

download the paper directly from here.

In this work we derive an approximated

no-arbitrage market valuation formula for Constant Maturity Credit Default

Swaps (CMCDS). We start from the CDS options market model in Brigo (2004), and

derive a formula for CMCDS that is the analogue of the formula for constant

maturity swaps in the default free swap market under the LIBOR market model. A

"convexity adjustment"-like correction is present in the related formula.

Without such correction, or with zero correlations, the formula returns an

obvious deterministic-credit-spread expression for the CMCDS price. To obtain

the result we derive a joint dynamics of forward CDS rates under a single

pricing measure, as in Brigo (2004). Numerical examples of the "convexity

adjustment" impact complete the paper.

- CDS

Market Formulas and Models (by Damiano Brigo and Massimo Morini)

Invited presentation at the

XVIII Warwick Option Conference (2005). Click here

to download this paper.

In this work we analyze market payoffs of Credit

Default Swaps (CDS) and we derive rigorous standard market formulas for pricing

options on CDS. Formulas are based on modelling CDS spreads which are

consistent with simple market payoffs, and we introduce a subfiltration

structure allowing all measures to be equivalent to the risk neutral measure.

Then we investigate market CDS spreads through change of measure and consider

possible choices of rates for modelling a complete term structure of CDS

spreads. We also consider approximations and apply them to pricing of specific

market contracts. Results are derived in a probabilistic framework similar to

that of Jamshidian (2004).

- Credit Default Swap Calibration and

Equity Swap Valuation under Counterparty Risk with a Tractable Structural

Model (by

Damiano Brigo and Marco Tarenghi)

Presented at the IASTED conference at MIT, November

2004. To download a PDF copy of this paper go to the SSRN website

clicking here,

or download the paper directly from here.

In this paper we develop a tractable structural

model with analytical default probabilities depending on some dynamics

parameters, and we show how to calibrate the model using a chosen number of

Credit Default Swap (CDS) market quotes. We essentially show how to use

structural models with a calibration capability that is typical of the much

more tractable credit-spread based intensity models. We apply the resulting

AT1P structural model to a concrete calibration case and observe what happens

to the calibrated dynamics when the CDS-implied credit quality deteriorates as

the firm approaches default. Finally we provide a typical example of a case